Learn what artificial intelligence (AI) is, what the elements of intelligence are, and what the subfields of AI are, such as machine learning, deep learning, NLP, and more.

Definition and Subfields of AI

What Is Artificial Intelligence?

Computer networking systems have improved human lifestyles by providing various types of devices and tools that reduce the physical and mental effort needed to perform tasks. Artificial intelligence is the next step in this process, making these systems more effective by applying logical, analytical, and productive skills to such tasks.

This tutorial explains what artificial intelligence is, along with its definitions and components, through various examples. We will also explore the difference between human and machine intelligence.

What is Artificial Intelligence (AI)?

There are many different technical definitions that can be used to describe artificial intelligence, but they can be complex and confusing. For better understanding, let me explain the definition in simple words.

Humans are considered the most intelligent species on the planet because they can solve a wide range of problems and analyze large amounts of data using skills such as analytical thinking, logical reasoning, statistical knowledge, and mathematical or computational intelligence.

With all these skills in mind, artificial intelligence has been developed to give machines and robots the ability to solve complex problems in ways similar to humans.

Artificial intelligence is applied in many fields, including medicine, the automotive industry, everyday applications, electronics, telecommunications, and computer networking systems.

Technically, in the context of computer networks, AI can be defined as computer devices and networking systems that can accurately understand raw data, gather useful information from that data, and then use these results to arrive at a final solution. Such systems approach the problems assigned to them flexibly and produce easily adaptable solutions.

Elements of Artificial Intelligence

1) Reasoning: A procedure that provides the basic criteria and guidance for making judgments, predictions, and decisions on any matter.

Inferences can be of two types. The first is generalized (inductive) inference, which is usually based on observed occurrences and statements; in this case, the conclusion can sometimes be wrong. The other is logical (deductive) reasoning, which is based on facts, figures, specific statements, and observed events; therefore, as long as those premises are true, the conclusion in this case is correct and logical.
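To make the two kinds of inference concrete, here is a minimal Python sketch under simple assumptions: facts are strings, rules are hypothetical if-then pairs applied repeatedly (logical reasoning), and generalization just checks whether every observation so far agrees. It is an illustration, not a full reasoning engine.

```python
# Logical (deductive) vs. generalized (inductive) inference.
# The facts, rules, and observations below are hypothetical examples.

def deduce(facts, rules):
    """Logical reasoning: apply if-then rules until no new facts can be derived.

    If the starting facts and the rules are true, every derived fact is true too.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire a rule only when all of its premises are already known.
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


def generalize(observations):
    """Generalized reasoning: infer "every item has color C" from observed cases.

    Returns the shared color, or None if the observations disagree. The
    conclusion only covers what has been seen so far, so it can be wrong.
    """
    colors = {color for _, color in observations}
    return colors.pop() if len(colors) == 1 else None


rules = [
    ({"has_feathers", "lays_eggs"}, "is_bird"),
    ({"is_bird"}, "has_beak"),
]
print(sorted(deduce({"has_feathers", "lays_eggs"}, rules)))
# ['has_beak', 'has_feathers', 'is_bird', 'lays_eggs']

swans = [("swan1", "white"), ("swan2", "white"), ("swan3", "white")]
print(generalize(swans))  # white
```

Adding a single black swan to `swans` makes `generalize` return `None`, which illustrates why generalized inferences can turn out to be wrong.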

2) Learning: The act of acquiring knowledge and skills from a variety of sources, including books, real-life events, experiences, and teaching by experts. Learning improves a person's knowledge in areas they do not yet know.

The ability to learn is not unique to humans; some animals and artificial intelligence systems also have this skill.

There are different types of learning:

Auditory learning is based on a process in which a teacher gives a lecture and students listen to it, memorize it, and use it to gain knowledge.

Linear learning is based on memorizing a series of events that a person has experienced and learned from.

Observational learning refers to learning by observing the behavior and facial expressions of other living beings, such as people or animals. For example, young children learn to speak by imitating their parents.

Perceptual learning is based on identifying and classifying visual objects and memorizing them.

Relational learning is based on an effort to learn from past thoughts and mistakes and to apply those lessons on the fly.

Spatial learning means learning from visual materials such as images, videos, colors, maps, and movies, which help people form mental images they can recall whenever they need them for future reference.

3) Troubleshooting: This is the process of determining the cause of a problem and figuring out how to fix it. It is done by analyzing the problem, making decisions, and then finding two or more solutions in order to arrive at the final, most appropriate solution.

The ultimate goal here is to find the best solution among the available ones, achieving the best troubleshooting results in the least amount of time.
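The "find two or more solutions, then choose the most appropriate one" step can be sketched in Python as a simple selection under a time budget. The candidate fixes, their estimated success rates, and their durations are hypothetical, and ranking by success rate first (then by speed) is just one reasonable choice.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    success_rate: float  # estimated chance the fix resolves the problem (0..1)
    minutes: float       # estimated time needed to apply the fix


def best_solution(candidates, time_budget):
    """Return the most likely fix that fits in the time budget, or None.

    Ties on success rate are broken in favor of the faster fix.
    """
    feasible = [c for c in candidates if c.minutes <= time_budget]
    if not feasible:
        return None
    return max(feasible, key=lambda c: (c.success_rate, -c.minutes))


# Hypothetical candidate fixes for a network outage.
fixes = [
    Candidate("restart router", 0.6, 5),
    Candidate("replace cable", 0.8, 20),
    Candidate("reinstall firmware", 0.9, 90),
]
print(best_solution(fixes, time_budget=30).name)  # replace cable
```

With a larger budget (for example, 120 minutes) the same function picks "reinstall firmware", showing how the time limit shapes which solution counts as best.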

4) Perception: The phenomenon of obtaining, inferring, selecting, and systematizing useful data from raw input.

For humans, perception is derived from experience of the environment, the sense organs, and the conditions of the situation. For artificial intelligence, however, perception data is obtained through artificial sensor mechanisms.

5) Linguistic intelligence: The ability to develop, grasp, read, and write speech in different languages. It is a fundamental component of how two or more individuals communicate, and it is necessary for analysis and logical understanding.

The Difference Between Human and Machine Intelligence

The following points explain the differences.

1) We have described above the components of human intelligence, which allow humans to perform different types of complex tasks and solve different kinds of unique problems in different situations.

2) Humans develop machines that are intelligent like humans and that provide nearly as good results for complex problems as humans do.

3) Humans segment data into visual and auditory patterns, past contexts, and situational events, whereas artificial intelligence machines recognize and process problems based on predefined rules and backlog (historical) data.

4) Humans memorize past data, learn it, and store it in the brain to remember it, whereas machines use search algorithms to find past data.

5) Linguistic intelligence also allows humans to recognize distorted images and shapes and to make sense of missing speech, data, and image patterns. Machines do not have this intelligence innately; instead, they use machine learning methodologies and deep learning processes that include various algorithms to achieve the desired results.

6) Humans follow their instincts, vision, experiences, situations, surrounding information, visual and raw data, and what a teacher or elder has taught them in order to analyze and solve problems and produce effective, meaningful results.

On the other hand, artificial intelligence machines at all levels deploy different algorithms, predefined steps, backlog data, and machine learning to achieve useful results.

7) The process followed by machines is complex and involves many procedures, but it provides the best results when you need to analyze large sources of complex data and perform unique tasks in different disciplines precisely, accurately, and within the given time.

For such tasks, the error rate of these machines can be much lower than that of humans.

Subfields of Artificial Intelligence

1) Machine Learning (ML)

Machine learning is a feature of artificial intelligence that gives computers the ability to automatically collect data and learn from the experience of problems or cases they have encountered, rather than being explicitly programmed to perform a given task.

Machine learning emphasizes the development of algorithms that can scrutinize data and make predictions. One prominent use is in the medical industry, where it is applied to disease diagnosis, medical scan interpretation, and similar tasks.
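As a minimal illustration of learning from data instead of explicit programming, the Python sketch below fits a straight line to example data with ordinary least squares and then uses the learned line to predict a new value. Least squares is a deliberately simple stand-in for the more sophisticated algorithms used in practice, and the data is hypothetical.

```python
def fit_line(xs, ys):
    """Learn slope and intercept of y = slope * x + intercept by least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Past "experience": hours of study vs. exam score (hypothetical values).
hours = [1, 2, 3, 4, 5]
scores = [52, 54, 56, 58, 60]

slope, intercept = fit_line(hours, scores)
print(slope, intercept)       # 2.0 50.0
print(slope * 6 + intercept)  # 62.0, the prediction for 6 hours of study
```

Nothing in `fit_line` encodes the rule "2 points per hour"; the program discovers it from the examples, which is the key difference from a hand-written rule.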

Pattern recognition is a subcategory of machine learning. It can be described as the automatic recognition of patterns in raw data using computer algorithms.

A pattern can be a continuous data series used to predict a set of events and trends, specific characteristics of image features used to identify objects, repetitive combinations of words and sentences used for language support, or a specific collection of people's behaviors in a network that can indicate social activity, and much more.

(i) Data acquisition and detection: This includes acquiring raw data, such as physical variables, and measuring properties such as frequency, bandwidth, and resolution. There are two types of data: unlabeled data and labeled data.

Unlabeled data comes without any labels, so the system groups and classifies it by applying clustering. Labeled data comes as a well-labeled dataset that can be used directly with the classifier.
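Grouping data that has no labels can be illustrated with k-means, one common clustering algorithm (the text does not name a specific one). This Python sketch assumes two clusters and starts from the first two points so the result is repeatable; the points are hypothetical.

```python
import math


def mean_point(cluster):
    """Average of a list of (x, y) points."""
    return tuple(sum(coords) / len(cluster) for coords in zip(*cluster))


def kmeans(points, k, iterations=10):
    # Start from the first k points as the initial cluster centers.
    centroids = list(points[:k])
    clusters = []
    for _ in range(iterations):
        # Assignment step: put every point in the cluster of its nearest center.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move every center to the mean of its cluster
        # (an empty cluster keeps its old center).
        centroids = [mean_point(c) if c else centroids[i] for i, c in enumerate(clusters)]
    return centroids, clusters


# Unlabeled points: nobody has told the algorithm which group each belongs to.
points = [(1.0, 1.0), (9.0, 9.0), (1.5, 2.0), (8.0, 8.5), (0.5, 1.5), (9.5, 8.0)]
centroids, clusters = kmeans(points, k=2)
print(clusters[0])  # [(1.0, 1.0), (1.5, 2.0), (0.5, 1.5)]
print(clusters[1])  # [(9.0, 9.0), (8.0, 8.5), (9.5, 8.0)]
```

The algorithm finds the two groups on its own; with labeled data, the labels would instead be given up front and fed straight to a classifier.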

(ii) Pre-processing of input data: This includes filtering out unwanted data, such as noise, from the input source, which is done through signal processing. This step also filters the existing patterns in the input data for further reference.

(iii) Feature extraction: To find the matching patterns required by the features, various algorithms, such as pattern-matching algorithms, are applied.

(iv) Classification: Classes are assigned to patterns based on the outputs of the algorithms applied and the various models trained to obtain matching patterns.

(v) Post-processing: Here the final output is presented, and you can check that the results achieved are most likely what you need.
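The five steps above can be sketched end to end in Python for short one-dimensional signals. Everything specific here is an assumption chosen for illustration: the two classes ("flat" and "rising"), the moving-average filter, the two features, the nearest-centroid classifier, and the rejection threshold.

```python
import math

# (i) Data acquisition: hypothetical labeled training signals.
TRAINING = [
    ([5, 5, 6, 5, 5, 6], "flat"),
    ([4, 5, 5, 4, 5, 4], "flat"),
    ([1, 2, 4, 5, 7, 8], "rising"),
    ([0, 2, 3, 5, 6, 9], "rising"),
]


def preprocess(signal, window=3):
    """(ii) Pre-processing: smooth out noise with a simple moving average."""
    return [sum(signal[i:i + window]) / window for i in range(len(signal) - window + 1)]


def extract_features(signal):
    """(iii) Feature extraction: the overall level and overall trend of the signal."""
    level = sum(signal) / len(signal)
    trend = signal[-1] - signal[0]
    return (level, trend)


def train(examples):
    """Compute one centroid (average feature vector) per class."""
    sums = {}
    for signal, label in examples:
        f = extract_features(preprocess(signal))
        total, count = sums.get(label, ((0.0, 0.0), 0))
        sums[label] = ((total[0] + f[0], total[1] + f[1]), count + 1)
    return {label: (t[0] / n, t[1] / n) for label, (t, n) in sums.items()}


def classify(signal, centroids):
    """(iv) Classification: assign the class whose centroid is nearest."""
    f = extract_features(preprocess(signal))
    distances = {label: math.dist(f, c) for label, c in centroids.items()}
    label = min(distances, key=distances.get)
    return label, distances[label]


def postprocess(label, distance, max_distance=5.0):
    """(v) Post-processing: reject results that are far from every known class."""
    return label if distance <= max_distance else "unknown"


centroids = train(TRAINING)
print(postprocess(*classify([2, 3, 3, 5, 6, 8], centroids)))  # rising
print(postprocess(*classify([50] * 6, centroids)))            # unknown
```

Keeping each step in its own function mirrors the pipeline in the text, so any single stage (for example, the classifier) can be swapped out without touching the others.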

Model for pattern recognition:

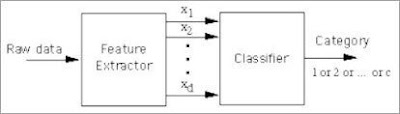

As shown in the figure above, the feature extractor derives features from raw input data such as audio, images, and video.

The classifier then takes these features, x, as input and assigns them to one of the categories Class 1, Class 2, …, Class C. Depending on the class, further recognition and analysis of patterns is performed.

Example of triangular shape recognition with this model:
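A toy version of triangular shape recognition with this model might look like the Python sketch below. It assumes the shape arrives as an ordered list of (x, y) points traced around its outline; the feature extractor counts the corners, and a rule-based classifier maps that count to a class. The input format and the class names are assumptions for illustration.

```python
def count_corners(outline):
    """Feature extractor: count the points where the outline changes direction."""
    corners = 0
    n = len(outline)
    for i in range(n):
        (x0, y0), (x1, y1), (x2, y2) = outline[i - 1], outline[i], outline[(i + 1) % n]
        # 2-D cross product of the incoming and outgoing edges; zero means the
        # three points are collinear, so this point is not a corner.
        cross = (x1 - x0) * (y2 - y1) - (y1 - y0) * (x2 - x1)
        if cross != 0:
            corners += 1
    return corners


def classify_shape(outline):
    """Classifier: map the corner-count feature to a shape class."""
    names = {3: "triangle", 4: "quadrilateral"}
    return names.get(count_corners(outline), "other")


# A triangle outline that also includes extra points lying along its edges.
triangle = [(0, 0), (2, 0), (4, 0), (2, 2), (0, 4), (0, 2)]
square = [(0, 0), (2, 0), (2, 2), (0, 2)]
print(classify_shape(triangle))  # triangle
print(classify_shape(square))    # quadrilateral
```

Because the extra edge points are collinear with their neighbors, they are ignored, so the triangle is still recognized from its three true corners.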

Pattern recognition is used in identification and authentication processes such as voice-based recognition and facial authentication, in defense systems for target recognition and navigation guidance, and in the automotive industry.

2) Deep Learning (DL)

It is a process in which a machine learns by processing and analyzing input data in several ways until it finds the desired output. It is also called self-learning of machines.

Machines run a variety of arbitrary programs and algorithms to map raw input sequences to outputs. By deploying various algorithms, such as neuroevolution, and other approaches, such as gradient descent on neural topologies, the network assumes that the output y is related to the input x through some unknown function f, so that y = f(x).

Interestingly, the role of the neural network is to find the correct function f.
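Finding an unknown function f with gradient descent can be sketched in a few lines of Python. To keep it small, this assumes f is linear, y = w * x + b, and generates the data from w = 3 and b = 1 (hypothetical values the model has to discover). A real deep network uses the same idea with many more parameters and non-linear layers.

```python
# Hypothetical training data produced by the "unknown" function y = 3x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [3.0 * x + 1.0 for x in xs]

w, b = 0.0, 0.0       # start with no knowledge of f
learning_rate = 0.05

for step in range(2000):
    # Gradients of the mean squared error with respect to w and b.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / len(xs)
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / len(xs)
    # Move both parameters a small step against the gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(round(w, 3), round(b, 3))  # 3.0 1.0
```

The learning rate is an assumption: much larger values make this loop diverge, while much smaller ones need many more steps to converge.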

Deep learning observes large databases of human traits and behaviors and performs supervised learning. This process includes:

Detecting different types of human emotions and signs.

Identifying people and animals in images by specific symbols, marks, or features.

Recognizing and memorizing a speaker's voice.

Converting video and voice to text data.

Identifying right or wrong gestures, classifying spam items, and detecting cases of fraud (for example, fraudulent claims).

All of these characteristics, including those mentioned above, are used to train artificial neural networks through deep learning.

Predictive analytics: After collecting vast datasets and training on them, we cluster similar data by accessing a set of available models, for example by comparing sets of similar speech, images, or documents.

Now that we have classified and clustered the dataset, we can predict future events based on the rationale of the current case by establishing a correlation between the two datasets. Remember that predictive decisions and approaches are time-limited.

The main thing to keep in mind when making predictions is that the output must be meaningful and logical to some degree.
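Establishing a correlation between two datasets before predicting, and refusing to predict when the result would not be meaningful, can be sketched in Python with Pearson's correlation coefficient and a least-squares line. The ad-spend and sales figures and the `min_r` threshold of 0.8 are hypothetical.

```python
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient r, from -1 (opposite) to 1 (in step)."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def predict(xs, ys, new_x, min_r=0.8):
    """Predict y for new_x from a least-squares line, but only when the
    correlation is strong enough for the result to be meaningful."""
    if abs(pearson(xs, ys)) < min_r:
        return None  # relationship too weak; refuse to predict
    mx, my = statistics.mean(xs), statistics.mean(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my + slope * (new_x - mx)


ad_spend = [10, 20, 30, 40, 50]
sales = [110, 190, 310, 390, 500]
print(round(pearson(ad_spend, sales), 3))                # 0.998
print(round(predict(ad_spend, sales, 60), 1))            # 594.0
print(predict([1, 2, 3, 4, 5], [5, 1, 4, 2, 3], 6))      # None
```

The threshold is a design choice: a correlation near zero means the line carries little information, so returning `None` is safer than returning a number that only looks precise.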

Through repeated attempts and self-analysis, the machine can arrive at a solution to the problem. An example of deep learning is speech recognition on mobile phones, which allows smartphones to understand speakers with different accents and convert their speech into meaningful text.

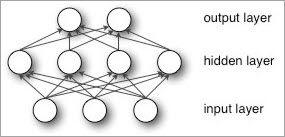

3) Neural Networks

Neural networks are the brains of artificial intelligence. They are computer systems that replicate the neural connections of the human brain. The artificial counterparts of the brain's neurons are known as perceptrons.
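A single perceptron can be sketched in Python with the classic perceptron learning rule. Here it learns the logical AND function; the zero starting weights, the learning rate of 1, and the epoch count are assumptions chosen so that all the arithmetic stays in exact integers.

```python
def step(z):
    """Threshold activation: the perceptron "fires" (1) only for positive input."""
    return 1 if z > 0 else 0


def train_perceptron(samples, epochs=10, lr=1):
    weights = [0, 0]
    bias = 0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            output = step(weights[0] * x1 + weights[1] * x2 + bias)
            error = target - output
            # Adjust the weights only when the output is wrong (error != 0).
            weights[0] += lr * error * x1
            weights[1] += lr * error * x2
            bias += lr * error
    return weights, bias


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND)
print([step(weights[0] * a + weights[1] * b + bias) for (a, b), _ in AND])  # [0, 0, 0, 1]
```

A single perceptron can only separate classes with a straight line, which is why, as described next, many perceptrons are stacked into networks.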

Stacks of perceptrons are combined to create the artificial neural networks of machines. Before providing the desired output, the neural network processes many training examples to gain knowledge.

This process of analyzing data using a variety of learning models provides solutions to many related queries that were previously unanswered.

Deep learning, as it relates to neural networks, can unfold multiple hidden layers of data between the input and output layers of complex problems, and it supports subfields such as speech recognition, natural language processing, and computer vision.

Types of neural networks

Early types of neural networks consisted of one input layer and one output layer, with at most one hidden layer, or just a single layer of perceptrons.

Deep neural networks have two or more hidden layers between the input and output layers. Deep learning processes are therefore required to unfold these hidden layers of data units.

In deep neural networks, each layer learns a distinct set of features based on the output of the previous layer. The deeper we go into the network, the more complex the features its nodes can recognize, because they aggregate and recombine the outputs of the previous layers to produce a clearer final output.
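The idea that each layer builds on the previous layer's output can be sketched as a forward pass through a small feed-forward network with two hidden layers. The weights are hand-picked, hypothetical values (not trained ones) that make the second hidden layer recombine simpler first-layer features into XOR, a feature that neither input shows on its own.

```python
def relu(v):
    """Non-linear activation: negative values become 0."""
    return [max(0.0, x) for x in v]


def dense(inputs, weights, biases):
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


LAYERS = [
    # Hidden layer 1: "how many inputs are on" and "are both inputs on".
    ([[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0], relu),
    # Hidden layer 2: recombines layer-1 features into "exactly one input is on".
    ([[1.0, -2.0]], [0.0], relu),
    # Output layer: passes the result through unchanged.
    ([[1.0]], [0.0], lambda v: v),
]


def forward(x):
    activation = x
    for weights, biases, act in LAYERS:
        # Each layer only sees the output of the layer before it.
        activation = act(dense(activation, weights, biases))
    return activation[0]


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, forward([a, b]))
# 0 0 0.0
# 0 1 1.0
# 1 0 1.0
# 1 1 0.0
```

In a trained network, the same forward pass is used, but the weights are learned from examples rather than chosen by hand.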

This whole process is called the feature hierarchy, a hierarchy of increasing complexity and abstraction. It enables deep neural networks to handle very large, high-dimensional datasets with billions of parameters that pass through linear and non-linear functions.

The main problem machine intelligence is struggling to solve is processing and managing the world's unlabeled and unstructured data, which is spread across all sectors and countries. Neural networks can now discover the latent structure and complex features of such data.

Deep learning with artificial neural networks can classify and characterize raw, unlabeled data, such as pictures, text, and audio, and organize it into a relational database with appropriate labels.

For example, deep learning takes thousands of raw images as input and classifies them based on basic features and characteristics: all animals, such as dogs, go in one group, inanimate objects, such as furniture, in another, and all family pictures in a third. The result is an organized photo collection, also known as a smart photo album.

As another example, consider the case of text data with thousands of emails as input. Here, deep learning categorizes emails into different categories based on their content: primary email, social email, promotional email, and spam email.

Feed-forward neural networks: The goal of using neural networks is to achieve the final result with minimal error and a high level of accuracy.

This procedure involves several steps, each involving prediction, error measurement, and a weight update, which slowly moves the model toward the desired function by adjusting the coefficients slightly.

At the start, we don't know which weights will transform the input into the best prediction. So we treat an initial set of weights as a model, make predictions sequentially to improve the results, and learn from the mistakes each time.

For example, we can compare neural networks to young children: they know nothing about the world around them when they are born, but they learn from their life experiences and mistakes and become wiser as they grow older.

The learning loop of the feed-forward network can be summarized by the formulas below.

Input * weight = prediction

then,

ground truth - prediction = error

then,

error * weight contribution to error = adjustment

Here, the input data is multiplied by the coefficients (weights) to get the network's predictions.

The predictions are then compared with the ground truth taken from the real-world scenario to find the error. Adjustments are then made to correct the error according to each weight's contribution to it.

These three functions are the core components of a neural network: scoring the input, evaluating the loss, and updating the model.

It is therefore a feedback loop that reinforces the coefficients that support good predictions and weakens those that lead to errors.
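The three formulas above (input * weight = prediction, ground truth - prediction = error, error * weight contribution to error = adjustment) can be run as a loop in Python for a single input and a single weight. The input value, ground truth, starting weight, and learning rate are hypothetical; for one weight, its contribution to the error is simply the input value.

```python
def train_single_weight(inp, ground_truth, weight=0.5, lr=0.1, steps=50):
    for _ in range(steps):
        prediction = inp * weight              # input * weight = prediction
        error = ground_truth - prediction      # ground truth - prediction = error
        adjustment = error * inp              # error * weight's contribution = adjustment
        weight += lr * adjustment             # nudge the weight toward the truth
    return weight


w = train_single_weight(inp=2.0, ground_truth=0.8)
print(round(w, 4), round(2.0 * w, 4))  # 0.4 0.8
```

Each pass shrinks the remaining error by a constant factor here (1 - lr * inp * inp = 0.6), so after 50 passes the prediction matches the ground truth to well beyond four decimal places.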

Handwriting recognition, face and digital signature recognition, and missing pattern identification are some real-world examples of neural networks.

4) Cognitive Computing

The purpose of this component of artificial intelligence is to initiate and accelerate interaction between humans and machines in completing complex tasks and solving problems.

While performing a variety of tasks alongside humans, machines learn and understand human behavior and emotions in a variety of unique conditions, and they reproduce human thought processes in computer models.

Through this practice, the machine acquires the ability to understand human language and images. Cognitive computing, together with artificial intelligence, could therefore create products that behave like humans while also having data processing capabilities.

Cognitive computing can help make accurate decisions for complex problems. It is therefore applied in areas where solutions need to be improved at optimal cost, and it works by analyzing natural language and using evidence-based learning.

Google Assistant, for example, is a prominent example of cognitive computing.

5) Natural Language Processing

This capability of artificial intelligence allows computers to interpret, identify, search for, and process human language and voice.

The idea behind this component is to facilitate interaction between machines and human language and to allow computers to provide logical responses to human speech or queries.

Natural language processing refers to active and passive modes of using algorithms that focus on both the spoken and written forms of human language.

Natural language understanding (NLU) interprets and decodes the sentences and words humans use, whether spoken or written, into a form the machine can work with, while natural language generation (NLG) produces human language, as text or speech, from the machine's data.

Computer applications that accept natural-language input, such as typed or spoken commands, are a common example of natural language processing.

Various types of translators that translate one language into another are examples of natural language processing systems, as are Google's voice assistant and voice search features.
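A very small taste of how a voice assistant or search feature might interpret a query is keyword-based intent detection, sketched below in Python. The intents and keyword sets are hypothetical, and this bag-of-words matching is a deliberately simple stand-in; real assistants rely on far more sophisticated statistical and neural models.

```python
import re

# Hypothetical intents and the keywords that signal them.
INTENTS = {
    "weather": {"weather", "rain", "sunny", "forecast", "temperature"},
    "translate": {"translate", "translation", "french", "spanish", "language"},
    "search": {"search", "find", "look", "google"},
}


def tokenize(text):
    """Lowercase the text and split it into words, dropping punctuation."""
    return re.findall(r"[a-z]+", text.lower())


def detect_intent(query):
    """Pick the intent whose keywords overlap most with the query's words."""
    words = set(tokenize(query))
    scores = {intent: len(words & keywords) for intent, keywords in INTENTS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


print(detect_intent("Will it rain tomorrow? Check the forecast."))  # weather
print(detect_intent("Translate 'good morning' into French"))        # translate
print(detect_intent("Play some music"))                              # unknown
```

Returning "unknown" when no keyword matches lets the application ask a follow-up question instead of guessing.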

6) Computer Vision

Computer vision is a very important part of artificial intelligence, as it allows computers to automatically recognize, analyze, and interpret visual data by capturing real-world images and visuals.

It integrates deep learning and pattern recognition technologies to extract the content of images from given data, including image or video files and documents such as PDF, Word, PowerPoint, and Excel files, graphs, pictures, etc.

Suppose you have a complex image of many objects; simply looking at it and memorizing it is not easy for everyone. Computer vision applies a series of transformations to an image so that fine-grained details can be extracted, such as the sharp edges of an object, an unusual design or color, and so on.

This is done using various algorithms that apply mathematical expressions and statistics. Robots use computer vision technology to see the world and act in real-time situations.
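One such transformation, detecting sharp edges, can be sketched with the Sobel operator in plain Python. The tiny grayscale image (a list of rows of 0 to 255 brightness values) and the threshold of 100 are hypothetical; libraries such as OpenCV provide optimized versions of this filter.

```python
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to left-right changes
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # responds to top-bottom changes


def apply_kernel(image, kernel, r, c):
    """Weighted sum of the 3x3 neighborhood centered on pixel (r, c)."""
    return sum(kernel[i][j] * image[r + i - 1][c + j - 1] for i in range(3) for j in range(3))


def edge_map(image, threshold=100):
    """Mark interior pixels whose gradient magnitude exceeds the threshold."""
    rows, cols = len(image), len(image[0])
    edges = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = apply_kernel(image, SOBEL_X, r, c)
            gy = apply_kernel(image, SOBEL_Y, r, c)
            if (gx * gx + gy * gy) ** 0.5 > threshold:
                edges[r][c] = 1
    return edges


# A dark region (0) on the left and a bright region (255) on the right.
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
for row in edge_map(image):
    print(row)
# [0, 0, 0, 0, 0, 0]
# [0, 0, 1, 1, 0, 0]
# [0, 0, 1, 1, 0, 0]
# [0, 0, 1, 1, 0, 0]
# [0, 0, 0, 0, 0, 0]
```

Border pixels are skipped for simplicity, since their 3x3 neighborhood would extend outside the image.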

This component is widely used in the medical industry to analyze patients' health conditions using MRI scans, X-rays, and similar images. It is also used in the automotive industry for computer-controlled vehicles and drones.

Conclusion

In this tutorial, I first outlined the various elements of intelligence and their importance when applied in real-world situations to achieve the desired results.

We then explored in detail the various subfields of artificial intelligence and their importance for machine intelligence and the real world, through mathematical representations, real-time applications, and various examples.

We also learned in detail about machine learning, pattern recognition, and neural networks, which play a very important role in many applications of artificial intelligence.
